On the other hand, to understand this section in more depth, you will need to review topcis in the chapter on foundations and in the sections on stochastic processes and stopping times.

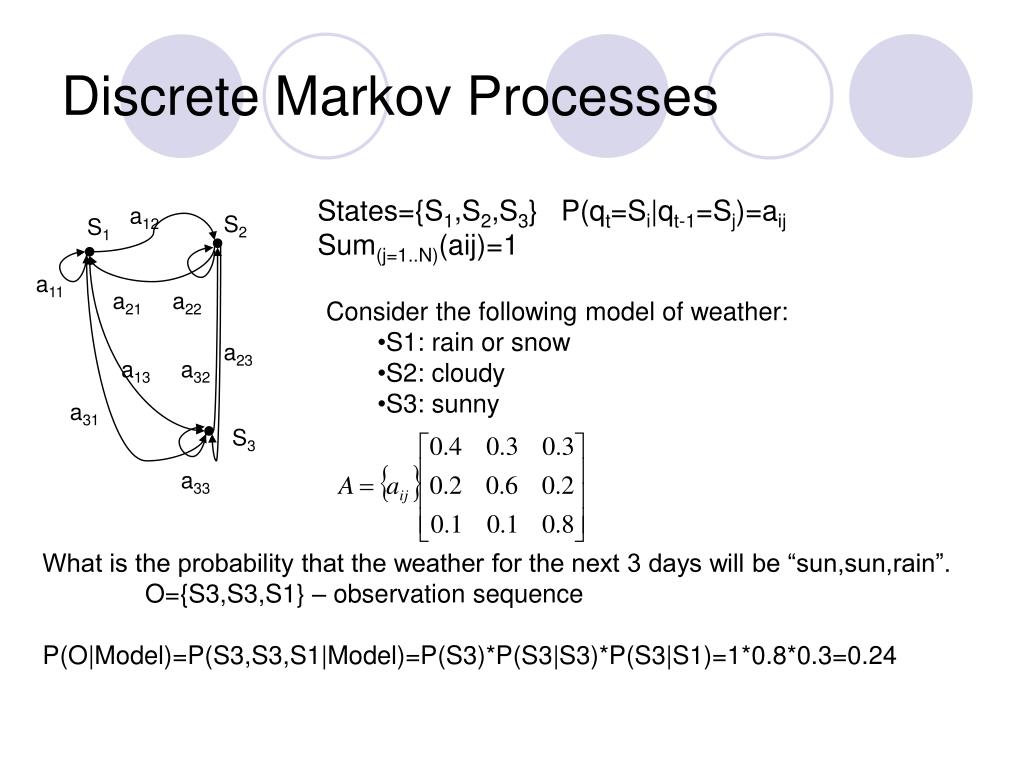

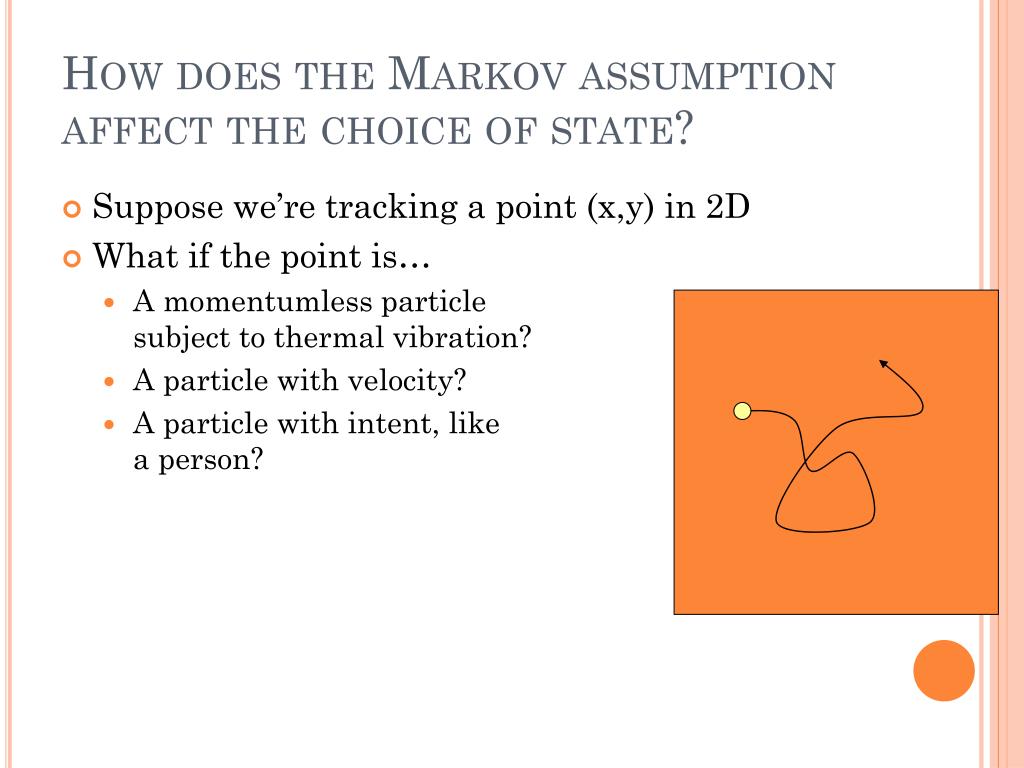

Then jump ahead to the study of discrete-time Markov chains. If you are a new student of probability you may want to just browse this section, to get the basic ideas and notation, but skipping over the proofs and technical details. Some of the statements are not completely rigorous and some of the proofs are omitted or are sketches, because we want to emphasize the main ideas without getting bogged down in technicalities. The goal of this section is to give a broad sketch of the general theory of Markov processes. Generally, such processes can be constructed via stochastic differential equations from Brownian motion, which thus serves as the quintessential example of a Markov process in continuous time and space. In the case that \( T = [0, \infty) \) and \( S = \R\) or more generally \(S = \R^k \), the most important Markov processes are the diffusion processes.Such sequences are studied in the chapter on random samples (but not as Markov processes), and revisited below. When \( T = \N \) and \( S \ = \R \), a simple example of a Markov process is the partial sum process associated with a sequence of independent, identically distributed real-valued random variables.However, we can distinguish a couple of classes of Markov processes, depending again on whether the time space is discrete or continuous. In terms of what you may have already studied, the Poisson process is a simple example of a continuous-time Markov chain.įor a general state space, the theory is more complicated and technical, as noted above. The Markov property also implies that the holding time in a state has the memoryless property and thus must have an exponential distribution, a distribution that we know well. The Markov property implies that the process, sampled at the random times when the state changes, forms an embedded discrete-time Markov chain, so we can apply the theory that we will have already learned. 
If we avoid a few technical difficulties (created, as always, by the continuous time space), the theory of these processes is also reasonably simple and mathematically very nice. When \( T = [0, \infty) \) and the state space is discrete, Markov processes are known as continuous-time Markov chains.Discrete-time Markov chains are studied in this chapter, along with a number of special models. Indeed, the main tools are basic probability and linear algebra. The theory of such processes is mathematically elegant and complete, and is understandable with minimal reliance on measure theory. When \( T = \N \) and the state space is discrete, Markov processes are known as discrete-time Markov chains.The general theory of Markov chains is mathematically rich and relatively simple. When the state space is discrete, Markov processes are known as Markov chains. When \( T = [0, \infty) \) or when the state space is a general space, continuity assumptions usually need to be imposed in order to rule out various types of weird behavior that would otherwise complicate the theory. The complexity of the theory of Markov processes depends greatly on whether the time space \( T \) is \( \N \) (discrete time) or \( [0, \infty) \) (continuous time) and whether the state space is discrete (countable, with all subsets measurable) or a more general topological space. In a sense, they are the stochastic analogs of differential equations and recurrence relations, which are of course, among the most important deterministic processes. Markov processes, named for Andrei Markov, are among the most important of all random processes. Introduction to General Markov ProcessesĪ Markov process is a random process indexed by time, and with the property that the future is independent of the past, given the present.
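
We can make the linear-algebra point about discrete-time Markov chains concrete with a short Python sketch. The three-state chain and its transition matrix `P` are hypothetical and serve only as an illustration. The function names are our own as well. If \( X_0 \) has distribution \( \mu \), written as a row vector, then \( X_n \) has distribution \( \mu P^n \). In the simulation, each step chooses the next state using only the current state, which is exactly the Markov property.

```python
import random

# Hypothetical 3-state chain on S = {0, 1, 2}; P[i][j] = P(X_{n+1} = j | X_n = i).
# The numbers are illustrative only; each row sums to 1.
P = [
    [0.5, 0.5, 0.0],
    [0.25, 0.5, 0.25],
    [0.0, 0.5, 0.5],
]


def step_distribution(mu, P):
    """Return the row vector mu P: the distribution one step later."""
    n = len(P)
    return [sum(mu[i] * P[i][j] for i in range(n)) for j in range(n)]


def distribution_at(mu, P, steps):
    """Return mu P^steps, the distribution of X_steps when X_0 has distribution mu."""
    for _ in range(steps):
        mu = step_distribution(mu, P)
    return mu


def simulate_path(x0, P, steps, rng):
    """Simulate X_0, ..., X_steps; each move depends only on the current state."""
    path = [x0]
    for _ in range(steps):
        current = path[-1]
        # Draw the next state from row `current` of P (the Markov property).
        nxt = rng.choices(range(len(P)), weights=P[current])[0]
        path.append(nxt)
    return path


if __name__ == "__main__":
    mu0 = [1.0, 0.0, 0.0]  # start in state 0 with probability 1
    print(distribution_at(mu0, P, 1))   # [0.5, 0.5, 0.0]
    print(distribution_at(mu0, P, 50))  # very close to [0.25, 0.5, 0.25]
    print(simulate_path(0, P, 10, random.Random(42)))
```

For this particular matrix, solving \( \pi P = \pi \) gives the stationary distribution \( \pi = (1/4, 1/2, 1/4) \). Repeated multiplication by \( P \) approaches \( \pi \) quickly.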

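The description of continuous-time Markov chains through an embedded jump chain and exponential holding times suggests a simple simulation scheme. The sketch below makes three assumptions:

- The state space is finite.
- The holding rates `rates[i]` are strictly positive.
- The embedded transition matrix `jump` has zeros on its diagonal, so every jump changes the state.

These parameter names and the helper functions are hypothetical. The Poisson process is the special case in which the chain always moves from \( n \) to \( n + 1 \) after an exponential holding time with a constant rate.

```python
import random


def simulate_ctmc(x0, rates, jump, t_max, rng):
    """Simulate a continuous-time Markov chain on {0, ..., len(rates) - 1} up to time t_max.

    rates[i] -- rate of the exponential holding time in state i (assumed > 0)
    jump[i]  -- row i of the embedded (jump) chain: probabilities of the next state,
                assumed to have jump[i][i] == 0
    Returns a list of (jump time, new state) pairs, starting with (0.0, x0).
    """
    t, x = 0.0, x0
    history = [(t, x)]
    while True:
        # Holding time in state x: exponential with rate rates[x] (memoryless).
        t += rng.expovariate(rates[x])
        if t > t_max:
            return history
        # The next state is chosen by the embedded discrete-time chain.
        x = rng.choices(range(len(jump)), weights=jump[x])[0]
        history.append((t, x))


def poisson_count(lam, t_max, rng):
    """Number of arrivals by time t_max for a Poisson process with rate lam.

    The chain on N always moves n -> n + 1 after an exponential(lam) holding
    time, so only the holding times need to be simulated.
    """
    t, n = 0.0, 0
    while True:
        t += rng.expovariate(lam)
        if t > t_max:
            return n
        n += 1


if __name__ == "__main__":
    rng = random.Random(1)
    # Hypothetical two-state chain: leave state 0 at rate 1.0 and state 1 at rate 3.0.
    print(simulate_ctmc(0, [1.0, 3.0], [[0.0, 1.0], [1.0, 0.0]], 5.0, rng)[:5])
    counts = [poisson_count(2.0, 10.0, rng) for _ in range(2000)]
    print(sum(counts) / len(counts))  # should be near 2.0 * 10.0 = 20
```

With rate 2 and time horizon 10, the average of many simulated Poisson counts should be near 20. That value is the mean of the Poisson distribution with parameter \( 2 \times 10 \).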